Event-Driven Architecture (EDA): Optimizer or Complicator?

This article shares real-world lessons from using tools like Kafka and AWS SNS, covering both the strengths of EDA and its common pitfalls.


Abstract

This article explores the practical realities of Event-Driven Architecture (EDA), a paradigm often celebrated for its scalability, responsiveness, and flexibility in modern software systems. Drawing on years of hands-on experience with technologies like Kafka, RabbitMQ, and AWS SNS, I present a balanced view of EDA's benefits and pitfalls. The article covers its core advantages, including loose coupling, asynchronous processing, real-time responsiveness, and extensibility, and shows how it complements approaches like Domain-Driven Design. It also sheds light on lesser-discussed challenges such as observability gaps, schema versioning, testing complexity, event duplication, and message sequencing. Real-world success stories from companies like Netflix and Walmart illustrate its potential, and I emphasize the importance of guardrails, error-handling patterns, and security best practices for building resilient systems. Ultimately, this article advocates a thoughtful, problem-first approach to adopting EDA: while it can optimize systems at scale, it must be applied judiciously to avoid unnecessary complexity.

Introduction

Over the years, I have used various technologies and tools to build applications that meet customer or internal needs. I am a developer who has seen the transition from the Titanic (waterfall) to the Jet Ski (agile), from Gantt charts to stand-ups, and from giant bricks (monoliths) to tiny boxes (microservices). We are always riding the wave of technology. Every time we move to something new or more modern, it gets glorified, and sometimes we put the technology ahead of the problem.

I have been part of various implementations over the years, ranging from ActiveMQ, RabbitMQ, and AWS SNS to, more recently, Apache Kafka. I have seen how well event-driven architecture blends in and helps build distributed systems. But not everything can be event driven, and EDA has its own pitfalls. This is my effort to put down my thoughts about the rush to move from a "request-driven architecture" to an "event-driven architecture."

What Is Event Driven Architecture?

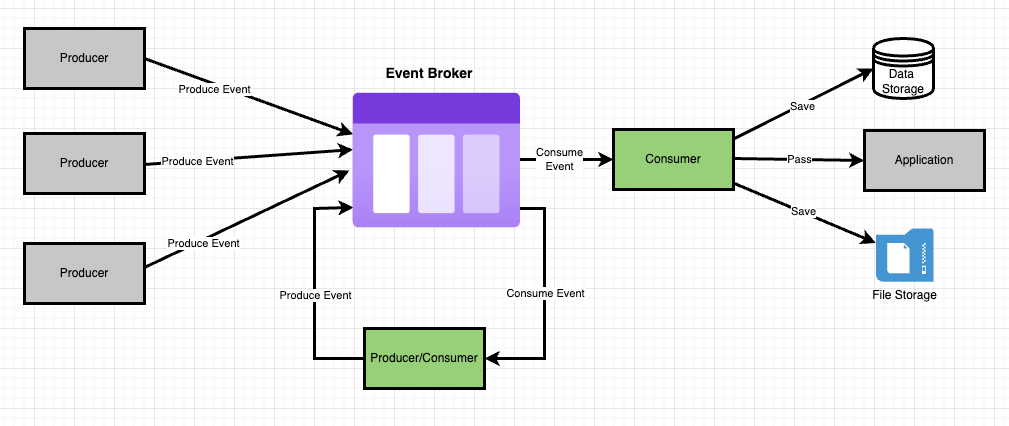

Event-driven architecture is a software design pattern built around events. It allows systems to detect, process, and react to real-time events as they happen. It helps bind together complex, distributed systems and is usually used in conjunction with other architectural styles such as microservices, domain-driven design, or serverless architecture. It works best alongside technologies and architectures that complement it, and adds little value when used alone.

There are also many technologies and tools that support event-driven systems and real-time processing. By decoupling services and using event brokers like Kafka, RabbitMQ, or AWS SNS/SQS, EDA helps achieve high availability, fault tolerance, and efficient handling of large-scale workloads.
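To make the pattern concrete, here is a minimal in-memory sketch, assuming a single process and no real broker. `InMemoryEventBus` and the `order.placed` topic are hypothetical names; a broker such as Kafka or SNS adds durability, partitioning, and network delivery that this toy leaves out. What it does show is the core contract: the producer publishes to a topic and never references the consumers behind it.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, List

Handler = Callable[[Dict[str, Any]], None]


class InMemoryEventBus:
    """Toy stand-in for a broker: synchronous, in-process, not durable."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: Dict[str, Any]) -> int:
        # The producer knows only the topic name, never who consumes it.
        handlers = self._subscribers.get(topic, [])
        for handler in handlers:
            handler(event)
        return len(handlers)  # number of consumers that received the event


bus = InMemoryEventBus()
emails, audit_log = [], []
bus.subscribe("order.placed", lambda e: emails.append(e["customer"]))
bus.subscribe("order.placed", lambda e: audit_log.append(e["order_id"]))
print(bus.publish("order.placed", {"order_id": "o-1", "customer": "alice"}))  # 2
```

Adding a third consumer is one more `subscribe` call; the publishing code does not change, which is the extensibility discussed below.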

Benefits of EDA

Asynchronous Processing

Tasks that take longer, or that do not need to give the user immediate feedback, can run in the background, allowing the system to handle multiple tasks concurrently. Since event producers and consumers are decoupled, they can scale independently based on demand. The system doesn't wait for a response before moving on to the next task, which reduces blocking and improves response times. Failures in one part of the system don't necessarily bring down the entire application.
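The sketch below illustrates the idea with Python's standard `asyncio` library; the checkout handler and email worker are hypothetical. The handler only enqueues the slow work and replies immediately, while two background workers drain the queue at their own pace. An in-process queue stands in for a broker here, so this shows the asynchronous shape of the flow, not broker semantics.

```python
import asyncio


async def handle_checkout(queue: asyncio.Queue, order_id: str) -> str:
    # Hand the slow work to the queue and reply right away instead of waiting for it.
    await queue.put(order_id)
    return f"accepted {order_id}"


async def email_worker(queue: asyncio.Queue, sent: list) -> None:
    # Background consumer: processes queued work independently of the request path.
    while True:
        order_id = await queue.get()
        await asyncio.sleep(0.01)  # stands in for a slow call, e.g. an email API
        sent.append(order_id)
        queue.task_done()


async def main() -> tuple:
    queue: asyncio.Queue = asyncio.Queue()
    sent: list = []
    workers = [asyncio.create_task(email_worker(queue, sent)) for _ in range(2)]
    replies = [await handle_checkout(queue, f"o-{i}") for i in range(4)]
    sent_before_replies = len(sent)  # every reply was produced before any email went out
    await queue.join()  # wait only so this demo can inspect the results
    for worker in workers:
        worker.cancel()
    await asyncio.gather(*workers, return_exceptions=True)
    return replies, sent, sent_before_replies


replies, sent, sent_before_replies = asyncio.run(main())
print(replies, sorted(sent), sent_before_replies)
```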

Loosely Coupled

Multiple systems can work together without knowing each other's internal complexities. Because components are decoupled, you can modify or upgrade one part of the system without significantly affecting others. Testing components independently is also easier: mocking or stubbing other services and components becomes straightforward, allowing for thorough, isolated tests. When issues arise, they are typically easier to isolate to specific components because of the loose dependencies. Different teams can work on separate components or services simultaneously without being blocked by each other's work, which speeds up development and deployment cycles.
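As an illustration of the testing point, a consumer that depends only on a small publishing interface can be unit tested with a recording stub instead of a live broker. `PaymentConsumer`, the topic names, and the business rule are hypothetical; the sketch uses `typing.Protocol` to describe the interface.

```python
from dataclasses import dataclass, field
from typing import List, Protocol, Tuple


class Publisher(Protocol):
    def publish(self, topic: str, event: dict) -> None: ...


class PaymentConsumer:
    """Hypothetical consumer: turns order events into payment events."""

    def __init__(self, publisher: Publisher) -> None:
        self.publisher = publisher

    def on_order_placed(self, event: dict) -> None:
        if event["amount_cents"] <= 0:
            self.publisher.publish("payment.rejected", {"order_id": event["order_id"]})
        else:
            self.publisher.publish(
                "payment.requested",
                {"order_id": event["order_id"], "amount_cents": event["amount_cents"]},
            )


@dataclass
class RecordingPublisher:
    """Test double: records what would have gone to the broker."""
    sent: List[Tuple[str, dict]] = field(default_factory=list)

    def publish(self, topic: str, event: dict) -> None:
        self.sent.append((topic, event))


def test_rejects_non_positive_amounts() -> None:
    stub = RecordingPublisher()
    PaymentConsumer(stub).on_order_placed({"order_id": "o-9", "amount_cents": 0})
    assert stub.sent == [("payment.rejected", {"order_id": "o-9"})]


test_rejects_non_positive_amounts()
```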

Highly Scalable

Event-driven systems are designed to scale up or down with demand and traffic, which makes EDA a much sought-after pattern these days. Asynchronous processing and loose coupling are what make them highly scalable. Event brokers can be scaled horizontally to handle more events: adding nodes to the broker infrastructure increases event-handling capacity, often close to linearly, allowing for higher throughput. Cloud platforms provide services that automatically adjust resources based on demand, so we can handle large amounts of traffic without managing scaling manually, which in turn can reduce costs considerably.
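Much of this horizontal scaling comes from partitioning: a topic is split into partitions, and the members of a consumer group share them. The function below is a simplified round-robin assignment, loosely modeled on the idea behind Kafka's `RoundRobinAssignor` but not its actual implementation, which also deals with multiple topics and rebalancing.

```python
def assign_partitions(partitions: int, consumers: list) -> dict:
    """Round-robin assignment of partition numbers to consumer group members (simplified)."""
    if not consumers:
        raise ValueError("a consumer group needs at least one member")
    members = sorted(consumers)  # deterministic order for this sketch
    assignment = {m: [] for m in members}
    for p in range(partitions):
        assignment[members[p % len(members)]].append(p)
    return assignment


print(assign_partitions(6, ["c1", "c2"]))        # {'c1': [0, 2, 4], 'c2': [1, 3, 5]}
print(assign_partitions(6, ["c1", "c2", "c3"]))  # {'c1': [0, 3], 'c2': [1, 4], 'c3': [2, 5]}
print(assign_partitions(2, ["c1", "c2", "c3"]))  # {'c1': [0], 'c2': [1], 'c3': []}
```

Going from two to three consumers shrinks each member's share from three partitions to two. The last call shows the limit: with more consumers than partitions, the extra consumer sits idle, so the partition count caps a group's parallelism.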

Real-Time Processing

Various systems can react to each other's events and bring a whole ecosystem together. One event can be consumed by one or more consumers, which may use it for different operations or decide not to act on it at all. Real-time processing in EDA enables systems to respond to changes as they happen, with low latency and high throughput. This model is ideal for scenarios that require immediate action on real-time data, such as fraud detection, real-time analytics, and user-facing applications.
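Fraud detection is a good example of reacting per event. The class below is a hypothetical velocity rule, assuming events for a card arrive in timestamp order: it keeps a sliding window of recent transaction times per card and flags a card as soon as it exceeds a threshold. The thresholds are arbitrary illustration values, not recommendations.

```python
from collections import defaultdict, deque


class VelocityRule:
    """Flags a card making more than `max_txns` transactions within `window_s` seconds.
    Assumes each card's events arrive in timestamp order."""

    def __init__(self, max_txns: int = 3, window_s: float = 60.0) -> None:
        self.max_txns = max_txns
        self.window_s = window_s
        self._recent = defaultdict(deque)  # card_id -> timestamps inside the window

    def on_event(self, card_id: str, ts: float) -> bool:
        window = self._recent[card_id]
        window.append(ts)
        # Evict timestamps that have slid out of the window.
        while ts - window[0] > self.window_s:
            window.popleft()
        return len(window) > self.max_txns


rule = VelocityRule(max_txns=3, window_s=60.0)
print([rule.on_event("card-1", t) for t in (0, 10, 20, 30, 100)])
# [False, False, False, True, False]
```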

Highly Extensible

Once an event is produced, there is no limit to how many systems can consume it, and no constraint on how they use it. Each consumer can process the same message and interpret and use part or all of its content as its use case requires. Since consumers process events independently, the overall system can handle much higher throughput than traditional request-response models.

Supplements Domain-Driven Design

Getting multiple domains to work in tandem is a hard goal to achieve. If each domain focuses on its own business logic, event-driven architecture can work beautifully to integrate them. EDA aligns well with Domain-Driven Design (DDD), especially in large, complex systems where services need to evolve independently while maintaining business integrity. It enhances DDD by providing a flexible, scalable, and asynchronous way to communicate between bounded contexts, ensuring that domain events are propagated efficiently while services stay loosely coupled and autonomous.
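In DDD terms, a domain event is an immutable fact named in the past tense, and each bounded context translates it into its own model at the boundary. The sketch assumes two hypothetical contexts, Ordering (which owns `OrderPlaced`) and Shipping (which keeps its own `Shipment` model); the field names are illustrative.

```python
import uuid
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class OrderPlaced:
    """Domain event owned by the Ordering context: immutable, named in the past tense."""
    order_id: str
    customer_id: str
    total_cents: int
    event_id: str = field(default_factory=lambda: str(uuid.uuid4()))
    occurred_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


@dataclass
class Shipment:
    """Shipping context's own model; it does not reuse Ordering's types internally."""
    order_ref: str
    status: str = "PENDING"


def to_shipment(event: OrderPlaced) -> Shipment:
    # Translating at the boundary keeps Shipping independent of Ordering's internals.
    return Shipment(order_ref=event.order_id)


placed = OrderPlaced(order_id="o-1", customer_id="c-7", total_cents=4999)
shipment = to_shipment(placed)
print(shipment)  # Shipment(order_ref='o-1', status='PENDING')
```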

Success Stories

There is no dearth of success stories for event-driven architecture. From giant companies like Uber, Netflix, Walmart, and LinkedIn to mid-size companies and startups, many have adopted it and reaped the benefits. Event-driven architecture has become a core architectural choice for modern software enterprises.

This is a good read on how Netflix picked a combination of push and pull mechanisms for its events. It is an example of one event flow with multiple consumption patterns (push or pull), at massive scale, with resilience and flexibility built in.

Walmart uses Kafka for its real-time replenishment use case. Walmart moved from reactive, slow replenishment to a proactive, real-time supply chain powered by event-driven architecture, with Kafka providing a scalable, decoupled, and resilient backbone.

Hidden Pitfalls

Event-driven architecture comes with often-unspoken challenges that can make development and maintenance very complex. The more common ones are listed below.

Harder Observability

Because events spread a single business flow across a distributed system, consolidating all the logs becomes a pain point. No centralized dashboard is available by default, and some failures can go unnoticed, potentially with cascading effects.

Schema Versioning

During development, working with events goes smoothly. Once in production, however, any schema change, such as adding or removing fields, has to be carefully investigated and made backward compatible, and a good regression suite has to be built.

Complexity in Testing

Developer testing, such as unit testing, can be straightforward. But simulating systems going offline, running end-to-end tests, and performance testing can be quite tedious.

Event Spamming

Events that lack sufficient detail can be useless. And, as with logs, too many events can create nothing but havoc for a developer trying to debug an issue.

Message Broker

EDA effectively requires a message broker such as Kafka or ActiveMQ. You can run a self-managed solution or buy a platform, and either option comes with a cost and a learning curve.

Deduplication

Duplicate events are a common problem. Typical causes include:

- Events being replayed because of faulty error handling

- Producers accidentally sending the same event more than once

- No standard unique ID mechanism

Sequencing messages

Sometimes business requirements call for processing the messages of a single business entity in sequence. Brokers handle this in different ways (Kafka, for example, guarantees ordering only within a partition), ordering is not always the default behavior, and teams have to handle it based on their business or design needs.
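Where events for one entity can still arrive out of order, a consumer can restore the sequence itself. The resequencer below is a sketch under one assumption: the producer stamps each event with an `entity_id` and a per-entity sequence number, here a hypothetical `seq` field starting at 1. It buffers early arrivals and releases events only once the gap before them is filled.

```python
from collections import defaultdict
from typing import Dict, List


class Resequencer:
    """Releases each entity's events strictly in `seq` order, buffering early arrivals."""

    def __init__(self) -> None:
        self._next_seq: Dict[str, int] = defaultdict(lambda: 1)
        self._pending: Dict[str, Dict[int, dict]] = defaultdict(dict)

    def accept(self, event: dict) -> List[dict]:
        entity, seq = event["entity_id"], event["seq"]
        if seq < self._next_seq[entity]:
            return []  # already released earlier: treat as a duplicate
        self._pending[entity][seq] = event
        released = []
        # Release the contiguous run starting at the next expected sequence number.
        while self._next_seq[entity] in self._pending[entity]:
            released.append(self._pending[entity].pop(self._next_seq[entity]))
            self._next_seq[entity] += 1
        return released


r = Resequencer()
print(r.accept({"entity_id": "acct-1", "seq": 2}))  # [] (waiting for seq 1)
print([e["seq"] for e in r.accept({"entity_id": "acct-1", "seq": 1})])  # [1, 2]
```

The buffer is unbounded in this sketch; a production version would need a size limit or a timeout for gaps that are never filled.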

Best Practices for Resilience

A resilient event-driven system is scalable, efficient, maintainable, and, most importantly, fault tolerant. Some suggestions for making a system more resilient:

Schema Versioning and Validation

Validating the schema at the client, broker, or consumer end helps avoid exceptions that can bring event processing to a halt. A good schema versioning pattern goes a long way toward preventing regressions: new consumers should be able to process old events, and old consumers should be able to ignore new fields.
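One common way to satisfy both rules is a "tolerant reader": validate the fields you truly need, supply defaults for fields older events lack, and ignore fields you do not recognize. The event shape, the added `currency` field, and its `USD` default are assumptions for illustration; schema registries used with Avro or Protobuf can enforce similar compatibility rules when a new schema version is registered.

```python
from dataclasses import dataclass


@dataclass
class OrderPlacedV2:
    order_id: str
    amount_cents: int
    currency: str  # hypothetical field added in v2; v1 events do not carry it


REQUIRED_FIELDS = {"order_id", "amount_cents"}


def parse_order_placed(raw: dict) -> OrderPlacedV2:
    """Tolerant reader: reject events missing required fields, default fields
    that older producers never sent, and silently ignore unknown fields."""
    missing = REQUIRED_FIELDS - set(raw)
    if missing:
        raise ValueError(f"invalid OrderPlaced event, missing {sorted(missing)}")
    return OrderPlacedV2(
        order_id=str(raw["order_id"]),
        amount_cents=int(raw["amount_cents"]),
        currency=raw.get("currency", "USD"),  # assumed default for v1 events
    )


v1 = parse_order_placed({"order_id": "o-1", "amount_cents": 500})
v3 = parse_order_placed({"order_id": "o-2", "amount_cents": 100, "currency": "EUR", "gift_wrap": True})
print(v1.currency, v3.currency)  # USD EUR
```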

Use Persistent Event Storage

A good persistence mechanism at the broker's end is very important, so that crashes, exceptions, and service restarts do not cause data loss, which can lead to catastrophic production issues. Using an immutable, append-only log with a longer TTL (time to live) is one good approach, since it allows events to be replayed. Depending on your needs, databases such as Postgres or DocumentDB can also be good options for event storage.
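The sketch below is an in-memory stand-in for an immutable, append-only log with time-based retention, assuming caller-supplied timestamps so the behavior is easy to follow. Offsets are never reused, so a consumer that crashed can replay from its last known offset as long as those events are still retained. Kafka follows the same general idea on disk, with settings such as `retention.ms`; this class is not its implementation.

```python
import time
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class AppendOnlyLog:
    """In-memory stand-in for a durable log: entries are only appended or
    expired by age, never modified, and offsets are never reused."""
    retention_s: float
    entries: List[Tuple[int, float, dict]] = field(default_factory=list)  # (offset, appended_at, event)
    next_offset: int = 0

    def append(self, event: dict, now: Optional[float] = None) -> int:
        ts = time.time() if now is None else now
        offset = self.next_offset
        self.entries.append((offset, ts, event))
        self.next_offset += 1
        return offset

    def expire(self, now: Optional[float] = None) -> None:
        # Retention (TTL): drop only entries older than retention_s.
        ts = time.time() if now is None else now
        self.entries = [e for e in self.entries if ts - e[1] <= self.retention_s]

    def replay(self, from_offset: int) -> List[dict]:
        # Re-read every retained event at or after from_offset, in offset order.
        return [event for offset, _, event in self.entries if offset >= from_offset]


log = AppendOnlyLog(retention_s=100.0)
for t, n in [(0.0, 1), (50.0, 2), (120.0, 3)]:
    log.append({"n": n}, now=t)
print(log.replay(1))  # [{'n': 2}, {'n': 3}]
log.expire(now=130.0)
print(log.replay(0))  # [{'n': 2}, {'n': 3}] (event 1 aged out)
```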

Idempotency and Unique IDs

- Assign unique IDs to events, ideally on the producer side.

- Add unique constraints if a database is involved.

- Use a sound consumer acknowledgement mechanism.

- Deduplication at the broker is a very powerful mechanism too. For example, Kafka supports exactly-once semantics (EOS) through idempotent producers and transactions, which can help prevent duplicates.

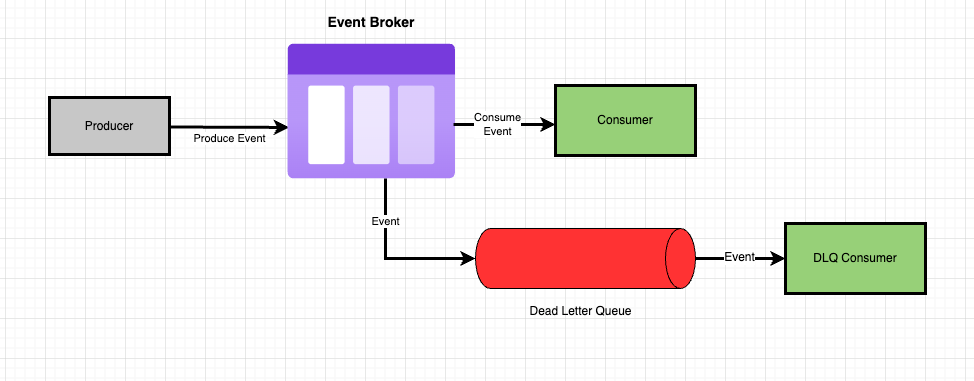
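Combining the first two points, a consumer can record each processed event ID under a unique constraint in the same transaction as its side effect, so a redelivered event is detected and skipped. The sketch uses Python's built-in `sqlite3` with hypothetical `processed_events` and `balances` tables; the pattern, not the schema, is the point.

```python
import sqlite3


def make_store() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    # PRIMARY KEY is the unique constraint that rejects a second copy of an event ID.
    conn.execute("CREATE TABLE processed_events (event_id TEXT PRIMARY KEY)")
    conn.execute("CREATE TABLE balances (account TEXT PRIMARY KEY, cents INTEGER NOT NULL)")
    return conn


def apply_deposit(conn: sqlite3.Connection, event: dict) -> bool:
    """Applies a deposit event at most once. Returns False if it was already processed."""
    try:
        with conn:  # one transaction: commits all statements or rolls them all back
            conn.execute("INSERT INTO processed_events (event_id) VALUES (?)", (event["event_id"],))
            conn.execute("INSERT OR IGNORE INTO balances (account, cents) VALUES (?, 0)", (event["account"],))
            conn.execute("UPDATE balances SET cents = cents + ? WHERE account = ?",
                         (event["cents"], event["account"]))
        return True
    except sqlite3.IntegrityError:
        return False  # duplicate delivery: safe to acknowledge and skip


conn = make_store()
deposit = {"event_id": "evt-42", "account": "acct-1", "cents": 500}
print(apply_deposit(conn, deposit), apply_deposit(conn, deposit))  # True False
print(conn.execute("SELECT cents FROM balances").fetchone()[0])  # 500
```

Because the marker and the balance update commit together, a crash between them cannot leave an event half-applied; a duplicate simply fails the primary-key check and can be acknowledged safely.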

Error Handling

Several well-established error-handling patterns apply to events as well:

- Dead Letter Queue (DLQ) - Keeps problematic messages out of the main flow and allows for manual intervention or analysis later. These messages can be retried, logged, or used to trigger alarms and alerts.

- Circuit Breaker Pattern - If a service is failing, this pattern stops sending further events to that consumer. It relieves pressure on the failing service and prevents cascading failures while maintaining high system availability.
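A compact sketch of both patterns together, assuming a single-threaded consumer and caller-supplied clock values: failed events are parked in a dead letter queue (a plain list here), and a circuit breaker stops calling a handler after repeated failures. `CircuitBreaker`, `dispatch`, and the `held` list are hypothetical; a real consumer would typically pause its subscription rather than buffer events in memory, and would retry before dead-lettering.

```python
from typing import Callable, Optional


class CircuitBreaker:
    """Opens after `threshold` consecutive failures, rejects calls for `cooldown_s`
    seconds, then lets calls through again, re-opening on the first new failure."""

    def __init__(self, threshold: int = 3, cooldown_s: float = 30.0) -> None:
        self.threshold = threshold
        self.cooldown_s = cooldown_s
        self.failures = 0
        self.opened_at: Optional[float] = None

    def allow(self, now: float) -> bool:
        if self.opened_at is None:
            return True
        if now - self.opened_at >= self.cooldown_s:
            self.opened_at = None
            self.failures = self.threshold - 1  # half-open: one more failure re-opens it
            return True
        return False

    def record(self, ok: bool, now: float) -> None:
        if ok:
            self.failures = 0
        else:
            self.failures += 1
            if self.failures >= self.threshold:
                self.opened_at = now


def dispatch(event: dict, handler: Callable[[dict], None], breaker: CircuitBreaker,
             dlq: list, held: list, now: float) -> None:
    """Routes one event: hold it while the breaker is open, park failures in the DLQ."""
    if not breaker.allow(now):
        held.append(event)  # downstream looks unhealthy, so don't hammer it
        return
    try:
        handler(event)
        breaker.record(True, now)
    except Exception as exc:
        breaker.record(False, now)
        dlq.append({"event": event, "error": repr(exc)})  # kept for analysis, alerts, or replay
```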

Observability

With the system being distributed, special thought has to be given to consolidating and monitoring logs, setting up alerts, collecting metrics, and monitoring performance. Tools like Datadog, Grafana, Splunk, or New Relic come to the rescue, but often at a significant cost.
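Tools aside, a cheap habit that makes distributed logs searchable is a correlation ID: the first event in a flow gets a new ID, and every event it causes inherits it. The sketch assumes a dict-shaped event with a hypothetical `correlation_id` field; standards such as W3C Trace Context, used by OpenTelemetry, formalize the same idea for tracing.

```python
import logging
import uuid
from typing import Optional

logging.basicConfig(level=logging.INFO, format="%(message)s")
log = logging.getLogger("events")


def new_event(event_type: str, payload: dict, caused_by: Optional[dict] = None) -> dict:
    """Starts a new correlation ID for a root event, or inherits the cause's ID."""
    correlation_id = caused_by["correlation_id"] if caused_by is not None else str(uuid.uuid4())
    return {"type": event_type, "correlation_id": correlation_id, "payload": payload}


def log_event(service: str, event: dict) -> None:
    # Filtering the log platform on one correlation_id reconstructs the whole flow.
    log.info("service=%s type=%s correlation_id=%s", service, event["type"], event["correlation_id"])


placed = new_event("OrderPlaced", {"order_id": "o-1"})
charged = new_event("PaymentCharged", {"order_id": "o-1"}, caused_by=placed)
shipped = new_event("OrderShipped", {"order_id": "o-1"}, caused_by=charged)
for service, event in [("orders", placed), ("payments", charged), ("shipping", shipped)]:
    log_event(service, event)
```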

Security Considerations

By applying security best practices, an event-driven architecture can remain robust against cyber threats, support compliance (GDPR, PCI-DSS, HIPAA), and maintain data integrity across distributed systems.

DDoS & Rate Limiting – Implement rate limits and throttling on event producers and consumers to prevent abuse and DDoS attacks.

Message Broker Security – Secure Kafka, RabbitMQ, or AWS SNS/SQS by configuring ACLs, enabling SSL, and restricting public access to prevent unauthorized event publishing or consumption.

Data Encryption – Encrypt events in transit (TLS) and at rest (AES-256) to protect sensitive information from interception or leaks.

Authentication & Authorization – Use OAuth 2.0, JWT, or API keys to ensure that only authorized producers and consumers can publish or subscribe to events. Role-Based Access Control (RBAC) or Attribute-Based Access Control (ABAC) can also be implemented for fine-grained permissions.
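For the rate-limiting point, a token bucket per producer is one common way to implement such limits. The rate, burst size, and the `admit` helper are hypothetical, and timestamps are passed in explicitly to keep the behavior deterministic.

```python
class TokenBucket:
    """Allows bursts up to `capacity` events, refilling at `rate_per_s` tokens per second."""

    def __init__(self, rate_per_s: float, capacity: float, start: float = 0.0) -> None:
        self.rate = rate_per_s
        self.capacity = capacity
        self.tokens = capacity
        self.last = start

    def try_acquire(self, now: float) -> bool:
        # Refill for the elapsed time, never beyond capacity, then spend one token if available.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


buckets: dict = {}


def admit(producer_id: str, now: float) -> bool:
    """Hypothetical per-producer limit: 1 event/s with bursts of up to 2."""
    if producer_id not in buckets:
        buckets[producer_id] = TokenBucket(rate_per_s=1.0, capacity=2.0, start=now)
    return buckets[producer_id].try_acquire(now)


print([admit("producer-a", t) for t in (0.0, 0.0, 0.0, 1.0)])  # [True, True, False, True]
print(admit("producer-b", 0.0))  # True: each producer has its own bucket
```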

Other Considerations

- Throttling - Slowing down processing when the system is already under stress is pivotal. Throttling can be applied at the broker level, in consumers, or both.

- Retries - Exponential backoff, minimum and maximum retry counts at the consumer, and retry topics are some mechanisms to follow.

- Rate limiting - Helps systems protect themselves from attacks and from accidental overload.

- Auto scaling - The number of consumers can be adjusted to the traffic load, adding pods under heavy traffic and removing them when traffic drops.
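Retries with exponential backoff can be sketched as follows. Beyond the article's list, this version adds random "full jitter" so that many consumers retrying at once do not hit a recovering service in lockstep; the parameter defaults are arbitrary. When it returns `False`, the caller would route the event to a retry topic or DLQ. The `sleep` parameter is injectable so the delays can be observed in tests.

```python
import random
import time
from typing import Callable


def process_with_retries(
    event: dict,
    handler: Callable[[dict], None],
    max_retries: int = 3,
    base_delay_s: float = 0.1,
    max_delay_s: float = 5.0,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Calls handler, retrying failures with capped exponential backoff and full jitter.
    Returns False when retries are exhausted so the caller can route the event
    to a retry topic or dead letter queue."""
    for attempt in range(max_retries + 1):
        try:
            handler(event)
            return True
        except Exception:
            if attempt == max_retries:
                return False
            # Delay ceiling doubles per attempt (0.1s, 0.2s, 0.4s, ...) up to max_delay_s.
            ceiling = min(max_delay_s, base_delay_s * 2 ** attempt)
            sleep(random.uniform(0, ceiling))  # jitter de-synchronizes competing consumers
    return False  # only reached if max_retries < 0
```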

Conclusion - A Balanced Approach

I am not arguing for synchronous architecture; I have seen how scalable and extensible event-driven architecture has made our systems. But if not done right, it can create maintenance havoc for an organization. Some key takeaways I would like to highlight:

- Not everything has to be solved via event driven patterns.

- Simple use cases have plenty of tried-and-tested alternatives. For a simple use case that can be solved with a few microservices and frontend technology, bringing in event-driven architecture might be overkill.

- There is no "one size fits all": understand your business requirements, scaling needs, and NFRs (non-functional requirements).

- Event-driven architecture and the right guardrails around it go hand in hand.

- Event-driven architecture is not inherently complex; it exposes complexity that we did not have to solve in a monolith, or that the monolith kept hidden.

- Most of us will not get event-driven architecture right the first time. Tweaking, learning as we go, and making modifications will pay off.

- Wherever possible, a hybrid system might be a good pattern to follow.

- As we look at a brighter future with AI and more robust new technologies, I think the first question to ask will always be “What is the problem we are trying to solve and why?”

Opinions expressed by DZone contributors are their own.
